“Sanskrit suits the language of computers and those learning artificial intelligence learn it,” Indian Space Research Organisation chairman S. Somanath said at an event in Ujjain on May 25. His was the latest in a line of statements exalting Sanskrit and its value for computing, but without any proof or explanation.

But beyond Sanskrit, how are other Indian languages faring in the realm of artificial intelligence (AI), at a time when its language-based applications have taken the world by storm?

The answer is a mixed bag. There is some passive discrimination, even as the languages’ fates are buoyed by public-spirited research and innovation.

Inside ChatGPT

Behind both seemingly intelligent chatbots and art-making computers, algorithms and data-manipulation techniques turn linguistic and visual data into mathematical objects (like vectors), and combine them in specific ways to produce the desired output. This is how ChatGPT is able to respond to your questions.

When working with a language, a machine first has to break a sentence or a word down into little bits, in a process called tokenisation. These are the bits that the machine’s data-processing model will work with. For example, “there’s a star” could be tokenised to “there”, “is”, “a”, and “star”.
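The splitting described above can be sketched in a few lines of Python. This is a toy illustration, not how ChatGPT tokenises text: the function name `tokenise` and the rule that a trailing “’s” always expands to “is” are assumptions made only for this example.

```python
import re


def tokenise(sentence: str) -> list[str]:
    """Split a sentence into lower-case word tokens (toy rules only)."""
    # Treat curly and straight apostrophes alike.
    text = sentence.lower().replace("’", "'")
    tokens = []
    for word in re.findall(r"[a-z']+", text):
        if word.endswith("'s"):
            # Assumption for this sketch: a trailing 's always stands for "is".
            tokens.extend([word[:-2], "is"])
        else:
            tokens.append(word)
    return tokens


print(tokenise("there's a star"))  # ['there', 'is', 'a', 'star']
```

A real tokeniser needs far more rules than this, which is one reason rule-based and learned approaches both exist.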

There are several tokenisation techniques. A treebank tokeniser breaks up words and sentences based on the rules that linguists use to study them. A subword tokeniser allows the model to learn a common word and the modifications to that word separately, such as “dusty” and “dustier”/“dustiest”.
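To make the subword idea concrete, here is a minimal sketch that splits a word into the longest pieces it can find in a small vocabulary. The vocabulary `VOCAB` and the function `subword_tokenise` are hypothetical and chosen only to fit the “dusty” example; production tokenisers learn their vocabularies from large amounts of text.

```python
# Hypothetical vocabulary: one shared stem plus three endings.
VOCAB = {"dust", "y", "ier", "iest"}


def subword_tokenise(word, vocab):
    """Greedily split a word into the longest pieces found in vocab."""
    pieces, start = [], 0
    while start < len(word):
        # Try the longest remaining substring first, then shorter ones.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # No known piece starts here: keep the single character as-is.
            pieces.append(word[start])
            start += 1
    return pieces


for w in ["dusty", "dustier", "dustiest"]:
    print(w, subword_tokenise(w, VOCAB))
```

All three words share the piece “dust”, so whatever a model learns about that piece carries over to each variant.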

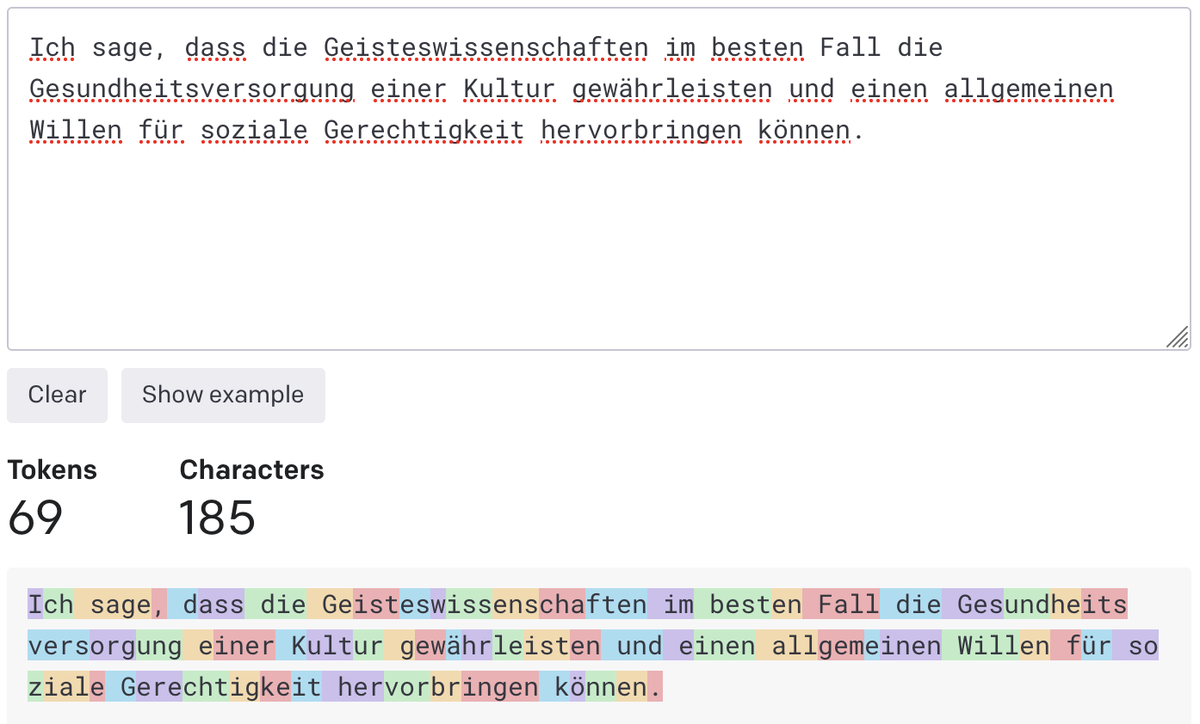

OpenAI, the maker of ChatGPT and the GPT sequence of giant language fashions, makes use of a sort of the subword tokeniser known as byte-pair encoding (BPE). Here’s an instance of the OpenAI API utilizing this on an announcement by Gayathri Chakravorty Spivak:
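For readers curious about how byte-pair encoding builds its vocabulary, the following is a minimal sketch of the core idea: repeatedly merge the most frequent adjacent pair of symbols. It works on characters and a three-word corpus for readability, whereas OpenAI’s tokeniser is understood to operate on bytes and a vastly larger corpus. The function names here are illustrative and are not part of any OpenAI library.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of pair with one joined symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe_merges(words, num_merges):
    """Learn up to num_merges merge rules from a list of words."""
    # Start with every word split into single characters.
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        # Pick the most frequent pair (ties go to the first one seen).
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            new_corpus[merge_pair(symbols, best)] += freq
        corpus = new_corpus
    return merges


def apply_merges(word, merges):
    """Tokenise a word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


merges = learn_bpe_merges(["dusty", "dustier", "dustiest"], num_merges=3)
print(merges)
print(apply_merges("dusty", merges))
```

With only three merges the shared stem “dust” already becomes a single token, while the rarer endings stay split into smaller pieces.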

English v. Hindi

In 2022, Amazon released a parallel database of one million utterances in 52 languages, called MASSIVE. ‘Parallel’ means the same utterance is presented in multiple languages. An utterance can be a simple query or phrase. For example, no. 38 in the Tamil section is “என்னுடைய திரையின் பிரகாசம் குறைவாக இயங்குகிறதா?”, meaning “does my screen’s brightness seem low?”.

On May 3 this year, AI researcher Yennie Jun combined the OpenAI API and MASSIVE to analyse how BPE would tokenise 2,033 phrases in the 52 languages.

Ms. Jun found that Hindi phrases were tokenised on average into 4.8x more tokens than their corresponding English phrases. Similarly, the token sequences for Urdu phrases were 4.4x longer and for Bengali, 5.8x longer. Running a model with more tokens increases its operational cost and resource consumption.
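One plausible contributor to this gap, offered here as an illustration and not as part of Ms. Jun’s analysis, is text encoding. A tokeniser that starts from UTF-8 bytes sees three bytes for every Devanagari character but only one for each basic Latin letter, so a Hindi word begins with more raw pieces before any merging happens. The helper `utf8_bytes_per_char` below is a hypothetical name used only for this sketch.

```python
def utf8_bytes_per_char(text):
    """Average number of UTF-8 bytes used per character of text."""
    return len(text.encode("utf-8")) / len(text)


english = "star"
hindi = "तारा"  # "star" in Hindi, written in Devanagari

print(english, utf8_bytes_per_char(english))  # 1.0 byte per character
print(hindi, utf8_bytes_per_char(hindi))      # 3.0 bytes per character
```

Byte counts alone do not determine token counts, since frequent byte sequences get merged into single tokens, but scripts that appear less often in the training text tend to receive fewer such merges.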

Both GPT and ChatGPT can also admit only a fixed number of input tokens at a time, which means they can parse more English text in one go than Hindi, Bengali, Tamil, and so on. Ms. Jun wrote that this matters because ChatGPT has been widely adopted “in various applications (from language learning platforms like Duolingo to social media apps like Snapchat),” spotlighting “the importance of understanding tokenisation nuances to ensure equitable language processing across diverse linguistic communities.”

ChatGPT can change

Generally, AI expert Viraj Kulkarni said, it’s “fairly difficult” for a model trained to work with English to be adapted to work with a language with different grammar, like Hindi.

“If you take [an English-based] model and fine-tune it using a Hindi corpus, the model might be able to reuse some of its understanding of English grammar, but it will still need to learn representations of individual Hindi words and the relationships between them,” Dr. Kulkarni, chief data scientist at Pune-based DeepTek AI, said.

Sentences in different languages are thus tokenised in different ways, even if they have the same meaning. Here’s Dr. Spivak’s statement in German, thanks to Google Translate:

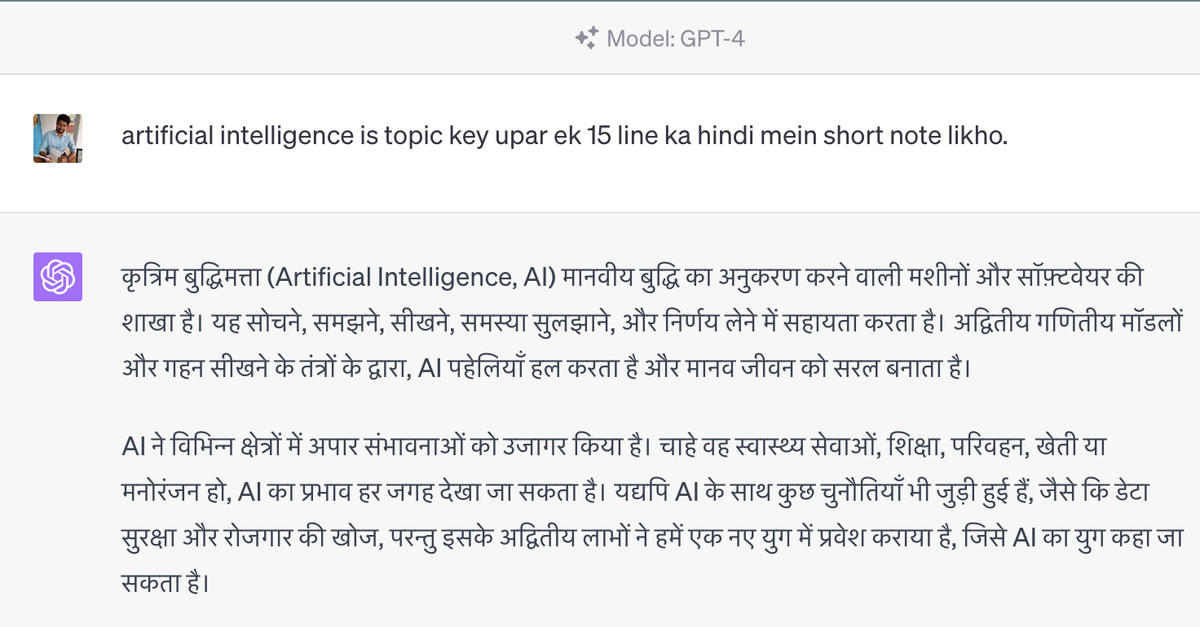

This said, ChatGPT in particular doesn’t struggle with other languages. According to Dr. Kulkarni, GPT-4, which powers the premium version of ChatGPT, was trained in English as well as “pretty much most major languages in the world, [so it] has no preference towards English or any other language.”

“It may be better at English than Hindi because it may have seen more English texts than Hindi texts, but it can very fluidly switch between languages,” he added.

Viraj Kulkarni has GPT-4 write a short note on AI in Hindi. | Photo Credit: Viraj Kulkarni/Special arrangement

But there’s still a cost difference. In January 2023, machine-learning expert Denys Linkov fed the first 50 utterances in English in MASSIVE to ChatGPT along with a prompt: “Rewrite the following sentence into a friendlier tone”. He repeated the process with the first 50 Malayalam utterances. He found that the latter used 15.69 times more tokens.

OpenAI charges a fixed rate to use ChatGPT based on the number of tokens, so Malayalam was also 15.69 times costlier.
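The arithmetic is simple enough to sketch. In the snippet below, the price per 1,000 tokens and the English token count are hypothetical placeholders, not OpenAI’s actual rates or Mr. Linkov’s raw numbers; only the 15.69 multiplier comes from his experiment. Because the charge is proportional to the token count, the cost ratio equals the token ratio whatever the price.

```python
# Hypothetical rate in dollars per 1,000 tokens; the real rate may differ.
PRICE_PER_1K_TOKENS = 0.002


def cost(num_tokens, price_per_1k=PRICE_PER_1K_TOKENS):
    """Charge for a request when pricing is proportional to token count."""
    return num_tokens / 1000 * price_per_1k


english_tokens = 1000                        # hypothetical token count
malayalam_tokens = english_tokens * 15.69    # multiplier reported above

ratio = cost(malayalam_tokens) / cost(english_tokens)
print(round(ratio, 2))  # 15.69
```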

More and more data

This said, for models designed to work with individual languages, adapting can be a problem, as can any language that doesn’t have much indexed material available for the model to train on. This is true of many Indian languages.

“Recent breakthroughs indicate quite unequivocally that the biggest gains in [model] performance come from only two factors: the size of the network, or number of parameters, and the size of the training data,” per Dr. Kulkarni.

A parameter is a numerical weight inside the network that the model adjusts as it trains. Taken together, the parameters capture the ways in which words can differ from each other, such as word type (verbs, adjectives, nouns, etc.) or tense. GPT-4 is widely reported to have more than a trillion parameters, although OpenAI has not disclosed the figure. In general, the more parameters a model has, the better its abilities.

The amount of training data for English is much larger than that for Indian languages. ChatGPT was trained on text scraped from the internet, a place where some 55% of the content is in English. The rest is all the other languages of the world combined. Can it suffice?

We don’t know. According to Dr. Kulkarni, there is no known “minimum size” for a training dataset. “The minimum size also depends on the size of the network,” he explained. “A large network is more powerful, can represent more complex functions, and needs more data to reach its maximum performance.”

Data for ‘fine-tuning’

“The availability of text in each language is going to be a long-tail – a few languages with lots of text, many languages with few examples”, and this is going to affect models dealing with the latter, said Makarand Tapaswi, a senior machine learning scientist at Wadhwani AI, a non-profit, and assistant professor at the computer vision group at IIIT Hyderabad.

“For rare languages,” he added, the model could be translating the words first to English, figuring out the answer, then translating back to the original language, “which can also be a source of errors”.

“In addition to next-word prediction tasks, GPT-like models still need some customisation to make sure they can follow natural language instructions, carry out conversations, align with human values, etc.,” Anoop Kunchukuttan, a researcher at Microsoft, said. “Data for this customisation, called ‘fine-tuning’, needs to be of high quality and is still available mostly in English only. Some of this exists for Indian languages, [while] data for most kinds of complex tasks needs to be created.”

Amazon’s MASSIVE is a step in this direction. Others include Google’s ‘Dakshina’ dataset with scripts for a dozen South Asian languages, and the open-source ‘No Language Left Behind’ programme, to create “datasets and models” that narrow “the performance gap between low- and high-resource languages”.

Meet AI4Bharat

In India, AI4Bharat is an IIT Madras initiative that’s “building open-source language AI for Indian languages, including datasets, models, and applications,” according to its website.

Dr. Kunchukuttan is a founding member and co-lead of the Nilekani Centre at AI4Bharat. To train language models, he said, AI4Bharat has a corpus called IndicCorp with 22 Indian languages, and its CommonCrawl website-crawler can support “10-15 Indian languages”.

One part of natural language processing is natural language understanding (NLU), in which a model works with the meaning of a sentence. For example, when asked “what’s the temperature in Chennai?”, an NLU model might perform three tasks: identify that this is a weather-related (1) question in need of an answer (2) pertaining to a city (3).
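The three tasks in that example can be mimicked with a handful of hand-written rules. The sketch below is a deliberately crude stand-in: real NLU models learn these decisions from data, and the word lists, city list, and function name `understand` are all hypothetical.

```python
import re

# Hypothetical keyword lists, invented for this illustration only.
WEATHER_WORDS = {"temperature", "weather", "rain", "humidity"}
QUESTION_WORDS = {"what", "when", "where", "who", "why", "how"}
KNOWN_CITIES = {"chennai", "mumbai", "delhi", "kolkata"}


def understand(utterance):
    """Label an utterance with its domain, question status, and city."""
    words = re.findall(r"[a-z]+", utterance.lower())
    city = next((w for w in words if w in KNOWN_CITIES), None)
    starts_like_question = bool(words) and words[0] in QUESTION_WORDS
    return {
        # Task 1: is this about the weather?
        "domain": "weather" if WEATHER_WORDS & set(words) else "unknown",
        # Task 2: is this a question in need of an answer?
        "is_question": utterance.strip().endswith("?") or starts_like_question,
        # Task 3: which city, if any, does it pertain to?
        "city": city.title() if city else None,
    }


print(understand("what's the temperature in Chennai?"))
```

Rules like these break quickly on paraphrases, other languages, or unlisted cities, which is why benchmarks that test learned models across many languages are useful.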

In a December 2022 preprint paper (updated on May 24, 2023), AI4Bharat researchers reported a new benchmark for Indian languages called “IndicXTREME”. They wrote that IndicXTREME has nine NLU tasks for 20 languages in the Eighth Schedule of the Constitution, including nine for which there aren’t enough resources with which to train language models. It allows researchers to evaluate the performance of models that have ‘learnt’ these languages.

Modest-sized models

Preparing such tools is laborious. For example, on May 25, AI4Bharat publicly released ‘Bharat Parallel Corpus Collection’, “the largest publicly available parallel corpora for Indic languages”. It contains 23 crore text-pairs; of these, 6.44 lakh were “manually translated”.

These tools also need to account for dialects, stereotypes, slang, and contextual references based on caste, religion, and region.

Computational techniques can help ease development. In a May 9 preprint paper, an AI4Bharat group addressed “the task of machine translation from an extremely low-resource language to English using cross-lingual transfer from a closely related high-resource language.”

“I think we are at a point where we have data to train modest-sized models for Indian languages, and start experimenting with the directions … mentioned above,” Dr. Kunchukuttan said. “There has been a spurt in activity of open-source modest-sized models in English, and it indicates that we could build promising models for Indian languages, which becomes a springboard for further innovation.”